
The “Function code” section lets you write the code in the inline text editor, upload a ZIP package, or upload from S3. A Selenium scraper is big, because you need to include a Chrome browser with it. But first, let’s set up some environment variables. Your function is set to run at a scheduled time.Ĥ. My expression therefore needs to be: cron(0 3 * * ? *)Ĭlick on “Add” and that’s it. In Lambda, you need to enter the time in GST, so that’s 3 AM. I want to scraper to run at 11 PM EST every night.

Now you have to write the weird cron expression that tells it when to run. Give it a description so you know what it’s doing if you forget. On the “add trigger” menu on the left, choose CloudWatch Events.Ĭlick on “Configuration required” to set up the time the script will run. Since we want this to run on a schedule, we need to set up the trigger. Here is where you set up the triggers, environment variables, and access the logs. Under “Policy templates” choose “Basic Edge Lambda permissions”, which gives your function the ability to run and create logs. For now give this role a name like “scraper”. Roles define the permissions your function has to access other AWS services, like S3. Under “Role”, choose “Create new role from template”. Give it a function name, and choose Python 3.6 as the runtime. Go to AWS Lambda, choose your preferred region and create a new function.Ģ. It lets you write or upload a script that runs according to various triggers you give it. For example, it can be run at a certain time, or when a file is added or changed in a S3 bucket.ġ. Lambda is Amazon’s serverless application platform.

I simply modified it a bit to work for me.ĭownload their repo onto your machine. They did most of the heavy work to get a Selenium scraper using a Chrome headless browser working in Lambda using Python. This guide is based mostly off this repo from 21Buttons, a fashion social network based in Barcelona. And it costs pennies a month, even for daily scrapes. You just need to upload your scripts and tell it it what to do. You don’t have to set up the software, maintain it, or make sure it’s still running.

How can something be serverless if it runs on an Amazon server? Well, it’s serverless for you. I wanted to work in Python, which Lambda also supports. But the demo I saw, and almost all the documentation and blog posts about this use Node.js. I wanted my script to be run from a server that never turns off.Īt the NICAR 2018 conference, I learned about serverless applications using AWS Lambda, so this seemed like an ideal solution. They can get unplugged accidentally, or restart because of an update.

#WEBSCRAPER CHROME FILL OUT FORMS MAC#

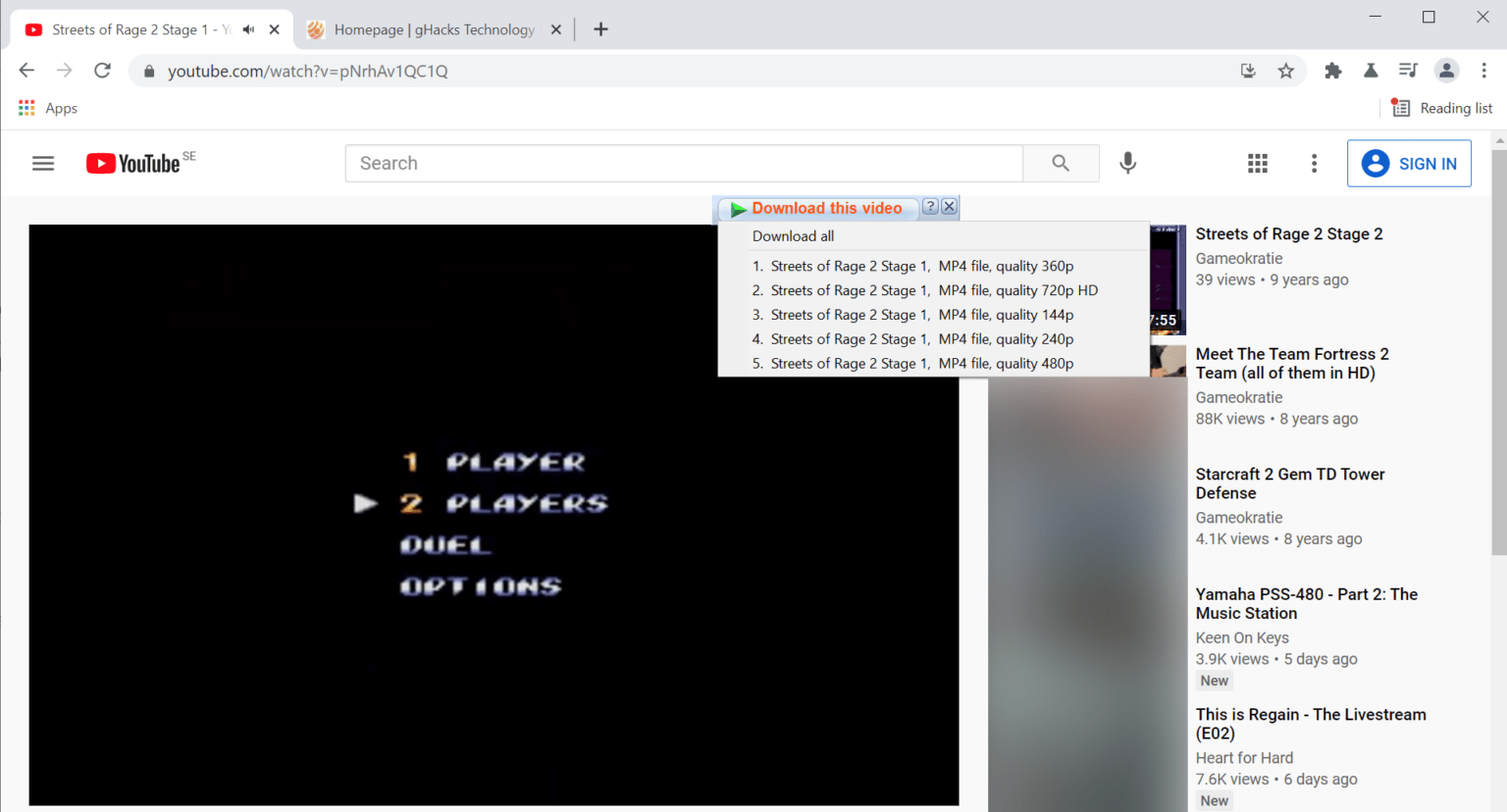

I could have run the script on my computer with a cron job on Mac or a scheduled task on Windows.īut desktop computers are unreliable. I wanted to scrape a government website that is regularly updated every night, detect new additions, alert me by email when something is found, and save the results. With this post, I hope to spare you from wanting to smash all computers with a sledgehammer. I recently spent several frustrating weeks trying to deploy a Selenium web scraper that runs every night on its own and saves the results to a database on Amazon S3. According to this GitHub issue, these versions work well together: What did work was the following:ĮDIT: The versions above are no longer supported. It’s based on this guide, but it didn’t work for me because the versions of Selenium, headless Chrome and chromedriver were incompatible.

#WEBSCRAPER CHROME FILL OUT FORMS HOW TO#

TL DR: This post details how to get a web scraper running on AWS Lambda using Selenium and a headless Chrome browser, while using Docker to test locally. This post should be used as a historical reference only. And here’s a list of useful pre-packaged layers. Here’s a post on how to make such a layer. This post is outdated now that AWS Lambda allows users to create and distribute layers with all sorts of plugins and packages, including Selenium and chromedriver.
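Lambda hands the key/value pairs you enter under “Environment variables” to your code as ordinary process environment variables, so Python reads them through `os.environ`. Here is a minimal sketch of a handler that does this. The variable names `TARGET_URL` and `RESULTS_BUCKET` and the default bucket name are hypothetical, not taken from the 21Buttons repo, and the function name `lambda_handler` assumes you keep the console’s default handler setting (`lambda_function.lambda_handler`).

```python
import os


def get_config():
    """Read the scraper's settings from environment variables.

    TARGET_URL and RESULTS_BUCKET are hypothetical names for this sketch;
    use whatever variables you define in the Lambda console.
    """
    return {
        # Required: raises KeyError if the page to scrape was never configured.
        "target_url": os.environ["TARGET_URL"],
        # Optional: falls back to a placeholder bucket name.
        "results_bucket": os.environ.get("RESULTS_BUCKET", "my-scraper-results"),
    }


def lambda_handler(event, context):
    """Entry point Lambda calls on every trigger (event, context)."""
    config = get_config()
    # The actual scraping would happen here; this sketch only reports
    # which settings it would use.
    return {"status": "ok", **config}
```

Keeping the URL and bucket out of the code means you can point the same ZIP package at a different page or bucket without re-uploading it.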
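The heart of the setup is launching the bundled headless Chrome from Python. The sketch below shows the kind of Chrome flags commonly used inside Lambda and how they are passed to Selenium. The binary paths under `/var/task/bin/` are assumptions about how the ZIP package is laid out (Lambda unpacks your code into `/var/task`), not paths taken from the 21Buttons repo. The driver is built with the Selenium 4 signature; older Selenium 3 releases took the chromedriver path as `executable_path=` instead of a `Service` object.

```python
import os

# Assumed locations of the bundled binaries inside the deployment package;
# adjust these to wherever your ZIP actually puts them.
CHROME_BINARY = "/var/task/bin/headless-chromium"
CHROMEDRIVER = "/var/task/bin/chromedriver"


def chrome_arguments(tmp_dir="/tmp"):
    """Chrome flags for running headless inside Lambda.

    Lambda's filesystem is read-only except for /tmp, so the profile
    and cache directories both point there.
    """
    return [
        "--headless",
        "--no-sandbox",             # Chrome's sandbox generally can't start inside Lambda
        "--disable-gpu",            # there is no GPU in the Lambda environment
        "--single-process",
        "--disable-dev-shm-usage",  # write shared-memory files to /tmp instead of /dev/shm
        "--window-size=1280,1696",
        f"--user-data-dir={os.path.join(tmp_dir, 'user-data')}",
        f"--disk-cache-dir={os.path.join(tmp_dir, 'cache-dir')}",
    ]


def make_driver():
    """Build a Selenium Chrome driver using the Selenium 4 API."""
    # Imported here so chrome_arguments() can be used without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.chrome.service import Service

    options = Options()
    options.binary_location = CHROME_BINARY
    for arg in chrome_arguments():
        options.add_argument(arg)
    return webdriver.Chrome(service=Service(executable_path=CHROMEDRIVER), options=options)
```

Inside the handler you would call `make_driver()`, load the page with `driver.get(url)`, and call `driver.quit()` when done so the Chrome process doesn’t linger between invocations.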
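The nightly “detect new additions” step boils down to comparing tonight’s results with the ones saved on the previous run. Here is a minimal sketch, assuming each scraped record is a dict with a unique `id` field. That schema is hypothetical, since the government site isn’t described here. Loading the previous IDs from S3 and sending the email are left out.

```python
def find_new_items(current, previous_ids, key="id"):
    """Return the records from this run whose key wasn't seen last run.

    current: list of dicts scraped tonight.
    previous_ids: iterable of keys saved from the previous run.
    key: name of the field that uniquely identifies a record (assumed "id").
    """
    seen = set(previous_ids)
    new_items = []
    for item in current:
        if item[key] not in seen:
            new_items.append(item)
            seen.add(item[key])  # also skip duplicates within tonight's results
    return new_items
```

If the returned list is non-empty, that is the point where the script would send the email alert and write the updated set of IDs back to S3 for the next night’s comparison.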
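The cron expression `cron(0 3 * * ? *)` comes from converting 11 PM Eastern time to UTC by hand, which is easy to get wrong. Here is a small helper that does the conversion, assuming you pass the zone’s UTC offset yourself (for example -4 for EDT or -5 for EST). AWS cron expressions have six fields (minutes, hours, day-of-month, month, day-of-week, year), and one of the two day fields must be `?`.

```python
def daily_cron_utc(local_hour, local_minute, utc_offset_hours):
    """Build an AWS cron expression for a daily run at a local time.

    utc_offset_hours is the local zone's offset from UTC, e.g. -4 for EDT
    or -5 for EST (half-hour offsets such as 5.5 also work).
    """
    # Shift the local time to UTC, in minutes since midnight.
    total_minutes = int(round((local_hour - utc_offset_hours) * 60)) + local_minute
    total_minutes %= 24 * 60  # wrap around midnight UTC
    hour, minute = divmod(total_minutes, 60)
    # Day-of-month is "*", so day-of-week must be "?".
    return f"cron({minute} {hour} * * ? *)"
```

`daily_cron_utc(23, 0, -4)` returns `cron(0 3 * * ? *)`, the expression used in this post, while `daily_cron_utc(23, 0, -5)` returns `cron(0 4 * * ? *)`. A fixed UTC schedule doesn’t follow daylight saving changes, so the 3 AM expression fires at 10 PM Eastern in winter.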
